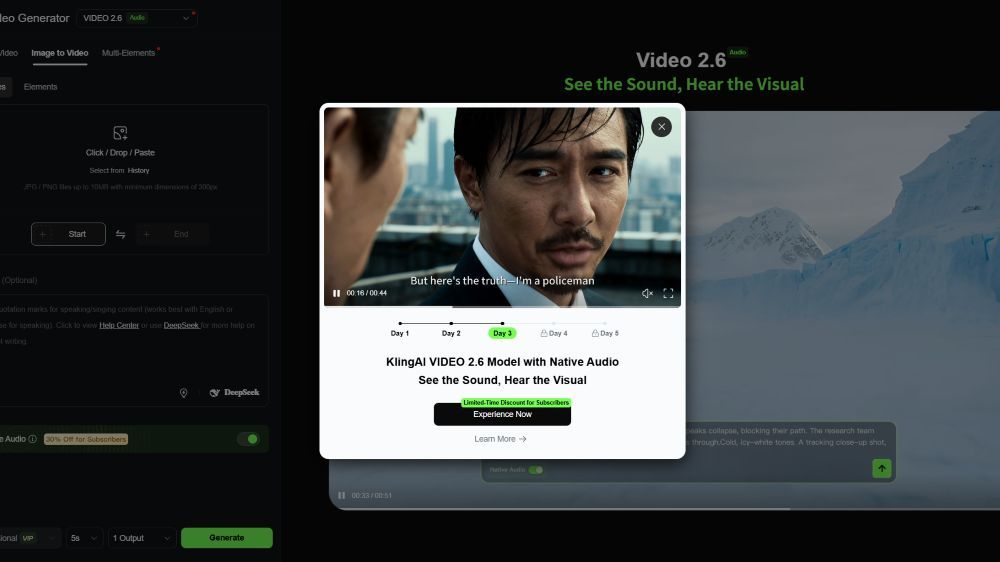

Kling AI VIDEO 2.6 Review: A Leap in Audio-Visual AI Generation

Overview

Kling AI's VIDEO 2.6 marks a significant advance in AI-generated video by introducing native audio synthesis, a feature that none of the tools it is compared against here offer. Workflows built around tools such as Runway ML or Pika Labs require separate audio post-processing; VIDEO 2.6 instead generates synchronized audio-visual content end-to-end, promising a more cohesive creative experience.

Key Innovations

1. Native Audio Generation

- Breakthrough: Eliminates the need for manual audio dubbing or sound-effect layering. Competitors such as Sora (OpenAI, in its original release) and Stable Video Diffusion output silent video, leaving audio to third-party tools (e.g., ElevenLabs, Adobe Premiere).

- Technical Edge: Deep alignment of audio semantics with visual motion (e.g., footsteps syncing with walking animations) targets the audio-visual mismatch that often makes AI-generated content feel uncanny.
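
A reviewer who wants to put a number on this kind of sync could compare the times of visible events (for example, foot contacts picked by hand from the frames) with the times of the matching audio onsets. The sketch below is a hypothetical measurement helper, not part of Kling's tooling: the function names, the 0.25-second matching window, and the sample timestamps are all assumptions made for illustration.

```python
from statistics import mean


def sync_offsets(visual_events, audio_onsets, max_gap=0.25):
    """Pair each visual event with its nearest audio onset (times in seconds).

    Returns signed offsets (audio time minus visual time). Visual events with
    no onset within max_gap seconds are treated as unmatched and skipped.
    max_gap is an assumed tolerance, not a published threshold.
    """
    offsets = []
    if not audio_onsets:
        return offsets
    for t_visual in visual_events:
        nearest = min(audio_onsets, key=lambda t_audio: abs(t_audio - t_visual))
        if abs(nearest - t_visual) <= max_gap:
            offsets.append(nearest - t_visual)
    return offsets


def summarize(offsets):
    """Report how many events matched, the mean offset, and the worst offset."""
    if not offsets:
        return {"matched": 0, "mean_offset": None, "worst_offset": None}
    return {
        "matched": len(offsets),
        "mean_offset": mean(offsets),
        "worst_offset": max(offsets, key=abs),
    }


# Hypothetical hand-labelled timestamps for a short walking clip.
footfalls = [0.50, 1.00, 1.50, 2.00]      # frames where a foot touches down
step_sounds = [0.52, 1.03, 1.49, 2.60]    # onsets of the footstep sounds

report = summarize(sync_offsets(footfalls, step_sounds))
print(report)
```

With these made-up numbers, three of the four footfalls find a matching sound and the last one is left unmatched, which is the sort of drift the "glitchy sync" concern describes. A positive offset means the sound arrives after the visual event.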

2. Audio Quality & Diversity

- Multi-Type Sound Support: Human voices, action SFX, and ambient sounds are, by Kling's description, generated with "cleaner quality" and "richer layers." On paper this rivals HeyGen (voice-centric) and Descript on voice and goes beyond them in environmental sound integration.

- Professional-Grade Output: Kling claims the output meets professional creator standards, though independent tests are needed to compare it with dedicated audio tools like Audo.ai.

3. Semantic Understanding

- Contextual Coherence: Enhanced interpretation of complex prompts (e.g., narratives with emotional arcs) could position it ahead of Pika 1.0, which struggles with long-form consistency.

- User Intent Alignment: Prompt adherence is similar to Google’s Veo, with the added audio context potentially narrowing the "misinterpretation gap" in creative workflows.

Competitive Landscape Comparison

| Feature | Kling VIDEO 2.6 | Runway Gen-2 | Pika 1.0 | Sora (OpenAI) |
|---|---|---|---|---|
| Native Audio | ✅ End-to-end | ❌ Manual editing | ❌ Silent clips | ❌ Silent clips |
| AV Sync | Deeply aligned | Basic lip-sync | Minimal | N/A |
| Sound Types | Voice, SFX, ambient | Voiceovers only | N/A | N/A |
| Prompt Complexity | High (narratives) | Moderate (short clips) | Low (3-sec clips) | High (longer scenes) |

Industry Implications

- Workflow Disruption: VIDEO 2.6 challenges the "generate-then-edit" paradigm, potentially reducing production time for social media ads, indie films, and game prototyping.

- Gaps to Address:

  - Real-World Testing: Claims of "professional-grade audio" need validation against tools like Adobe Audition.

  - Customization: Can users fine-tune audio parameters (e.g., voice pitch, SFX volume)? Competitors like ElevenLabs offer granular control.

- Market Position: Targets a niche between all-in-one suites (e.g., Canva’s AI video) and specialized tools (e.g., Midjourney + Boomy). Its success hinges on balancing ease-of-use with advanced features.

Verdict

Kling AI’s VIDEO 2.6 is a pioneering but unproven contender. Its native audio integration could redefine AI video generation if it delivers on:

- Consistency (avoiding "glitchy" sync in long clips),

- Accessibility (pricing tiers, hardware requirements), and

- Community Adoption (plugins for Premiere Pro/Final Cut).

For now, it’s a promising alternative for creators prioritizing speed over granular control, but the broader market may wait for v3.0 before fully transitioning from established tools.