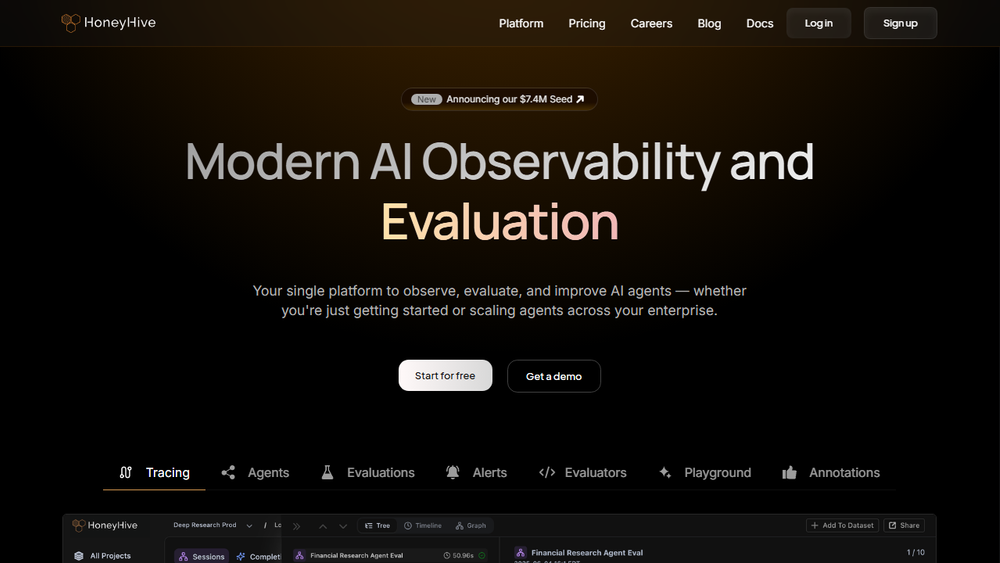

HoneyHive is a modern AI observability and evaluation platform designed to empower enterprises to confidently scale AI agents in production. It offers a comprehensive solution for the entire Agent Development Lifecycle (ADLC), ensuring that AI agents are trustworthy and reliable by design.

Core Features:

- AI Observability: Gain end-to-end visibility into your AI agents' performance across the enterprise. Analyze underlying logs to debug issues faster and understand agent behavior in real time. This includes OpenTelemetry-native ingestion, session replays, and rich graph and timeline visualizations of agent steps.

- AI Evaluation: Systematically measure AI quality with robust evaluation tools. Run experiments offline against large datasets to identify regressions before they impact users. HoneyHive supports online evaluation using LLM-as-a-judge or custom code, and allows domain experts to grade outputs through annotation queues.

- Monitoring & Alerting: Continuously monitor key metrics such as cost, latency, and quality at every step of the agent's operation. Get real-time alerts for critical AI failures and detected drift. The platform offers custom charts for tracking KPIs and advanced filtering and grouping for in-depth data analysis.

- Artifact Management: Facilitate collaboration between domain experts and engineers with a centralized platform for managing prompts, tools, datasets, and evaluators. This includes version management, Git integration for prompt deployment, and a collaborative IDE for prompt management.

- Open Standards: HoneyHive is OpenTelemetry-native, so it integrates with existing observability ecosystems and remains flexible and extensible.
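
To make the offline evaluation idea above more concrete, here is a minimal sketch of a custom code evaluator run against a small dataset to flag a regression. It is illustrative only and does not use the HoneyHive SDK: the names `Example`, `exact_match`, `run_experiment`, `has_regressed`, the two stand-in agents, and the 0.05 tolerance are all assumptions made for this example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Sequence


@dataclass
class Example:
    """One dataset row: an input prompt and the expected answer (hypothetical schema)."""
    prompt: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Custom code evaluator: 1.0 if the normalized strings match, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_experiment(
    agent: Callable[[str], str],
    dataset: Sequence[Example],
    evaluator: Callable[[str, str], float],
) -> float:
    """Run the agent on every example and return the mean evaluator score."""
    return mean(evaluator(agent(ex.prompt), ex.expected) for ex in dataset)


def has_regressed(baseline: float, candidate: float, tolerance: float = 0.05) -> bool:
    """Flag a regression when the candidate score drops below baseline minus tolerance."""
    return candidate < baseline - tolerance


# A tiny stand-in dataset; a real experiment would use a much larger one.
DATASET = [
    Example("capital of France?", "Paris"),
    Example("2 + 2?", "4"),
    Example("opposite of hot?", "cold"),
    Example("color of a clear daytime sky?", "blue"),
]

_ANSWERS = {ex.prompt: ex.expected for ex in DATASET}


def baseline_agent(prompt: str) -> str:
    """Stand-in for the current production agent: answers every prompt correctly."""
    return _ANSWERS.get(prompt, "")


def candidate_agent(prompt: str) -> str:
    """Stand-in for a new agent version that gets one prompt wrong."""
    if prompt == "opposite of hot?":
        return "warm"
    return _ANSWERS.get(prompt, "")


if __name__ == "__main__":
    base = run_experiment(baseline_agent, DATASET, exact_match)
    cand = run_experiment(candidate_agent, DATASET, exact_match)
    print(f"baseline={base:.2f} candidate={cand:.2f} regressed={has_regressed(base, cand)}")
```

In practice the evaluator could be swapped for an LLM-as-a-judge call or a human grade from an annotation queue; the comparison step stays the same as long as each evaluator returns a numeric score.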

Target Users:

HoneyHive is designed for organizations of any size, from startups to Fortune 100 companies, that are developing and deploying AI agents. Typical users include:

- AI Engineers: To monitor, debug, and optimize the performance of their AI agents.

- MLOps Engineers: To ensure the reliability, scalability, and trustworthiness of AI agents in production environments.

- Data Scientists: To evaluate the quality and performance of AI models and agents.

- Product Managers: To understand user impact and identify areas for improvement.

- Domain Experts: To contribute to the evaluation and annotation process, ensuring AI outputs align with business requirements.

Key Benefits:

- Confidently Scale Agents: Deploy and manage AI agents in production with peace of mind.

- Ensure Trustworthiness: Build reliable and predictable AI systems.

- Accelerate Development: Debug and optimize agents faster with comprehensive observability.

- Improve AI Quality: Continuously evaluate and enhance agent performance.

- Foster Collaboration: Enable seamless teamwork between engineering and domain expertise.

The platform's commitment to open standards and a collaborative approach makes it a powerful tool for organizations looking to harness the full potential of AI agents.